LET'S CREATE VALUABLE AI SOLUTIONS WITH AIGENCY

Design & Develop robust and trustworthy Machine Learning models

AI has already begun to change the way businesses operate. It can help you automate routine tasks, improve customer service, and make better decisions faster. And that’s just the beginning. With the help of AI, businesses can reach new heights and discover new opportunities they never would have thought possible.

Services

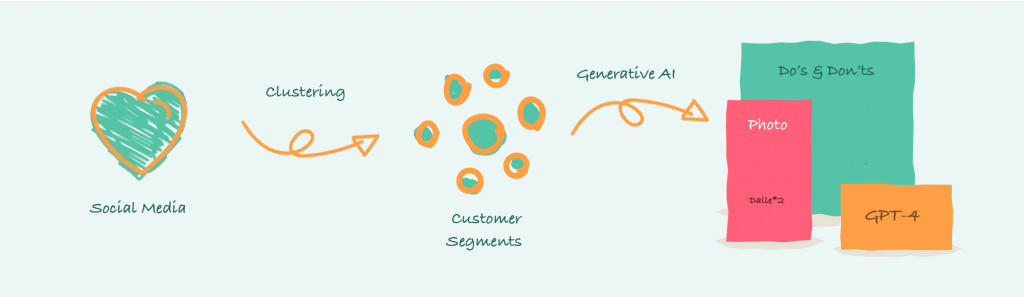

Step into the world of Large Language Models and Generative Models.

Start with Generative AI, such as Stable Diffusion or GPT-4: read about a real-life reference case and check out our Advanced ChatGPT Workshop.

Our Services

We provide a wide range of AI services

BUILD MODELS

Design & Develop robust and trustworthy Machine Learning models

MLOPS

Setting up a platform for continuous model development & optimization

AI Strategy

If you’re looking to take your business to the next level, you need an AI strategy. AI has become essential for companies, so it is important to have a plan for how to use it in your business. With a clear AI strategy, businesses can stay ahead of the competition and improve their bottom line.

TRUSTED BY GLOBAL BRANDS

Why choose Aigency?

We’re the leading experts in Artificial Intelligence and Machine Learning.

- Award-winning expertise, including Microsoft AI MVP Awards

- Responsible AI workflow based on scientific research

- We believe that no machine learning model can go without Explainable AI

- We can train, deploy, monitor and maintain the most complex models

Productivity

Speed up with our accelerators

Accelerators are designed to speed up machine learning development. They do this by providing pre-configured environments and libraries that allow data scientists and ML engineers to get up and running quickly.

Contact

LET'S TALK AI

Latest Cases

Logiqs - AI Design Week provides new insights and practical tools